Mont Saint-Michel

With the summer holidays approaching, we decided to explore a bit of France.

After a stay in Rennes, we finally went to Mont Saint-Michel, the famous castle floating in the ocean sky.

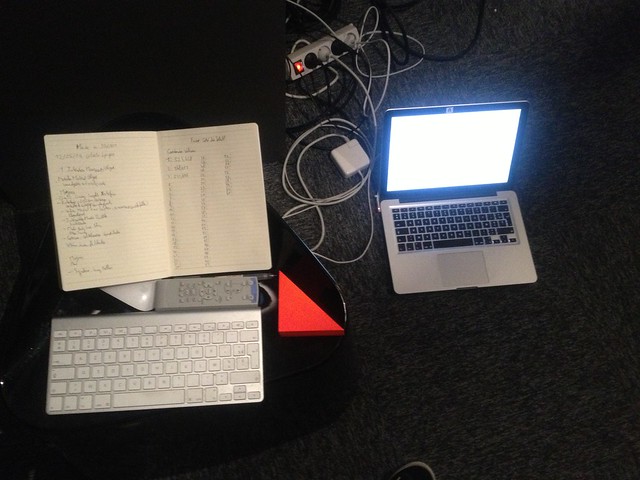

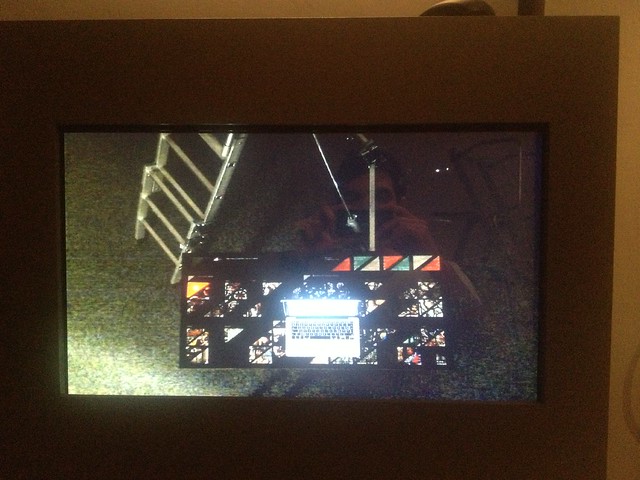

I recently worked on an interactive installation for “La Cité du Vitrail” in the French city of Troyes. The original installation was no longer working, and since the original developer was living abroad, I was asked to debug it or, if necessary, develop entirely new software.

The setup is quite simple: a webcam tracks the position of a red pointer in real time, and a stained-glass window is projected in the chapel whenever the pointer is placed on the corresponding window on the surface of the table.

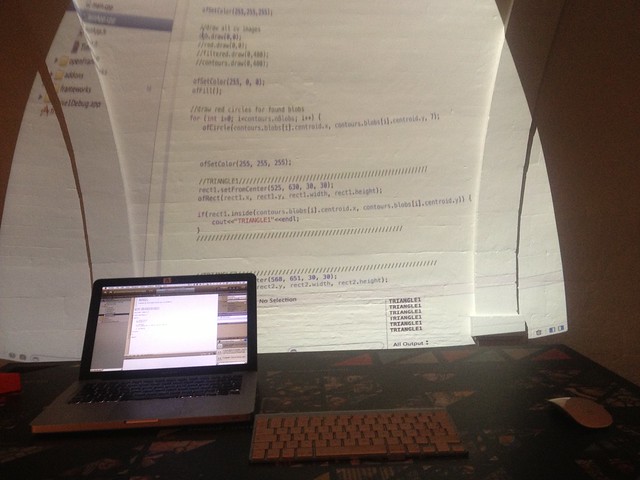

The original program was made with Processing: one application was dedicated to detecting the pointer and another one handled the display of the correct stained-glass window. The two applications communicated via server sockets, which led to signal loss at random intervals. On top of that, the camera detection was quite sensitive to the ambient light in the space, which is why the program did not always work as expected.

I first tried to merge everything into a single Processing application, but even after debugging it was not stable enough. Even though I had only 4 days before the inauguration of the new collection, I decided to build entirely new software in C++ with openFrameworks. I used the OpenCV library to detect blobs in the camera image and assigned coordinates to each of the 61 triangles represented on the table. When the pointer is over the right coordinates, it triggers an animation with the ofxTween library that displays the stained-glass window.
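The detection and hit-testing steps described above can be sketched in plain C++. This is not the installation's code: as an assumption, it replaces OpenCV's blob detection with a simple centroid of strongly red pixels, and all names (`findRedPointer`, `insideTriangle`, `hitTriangle`, the `windowId` field) and threshold values are hypothetical. Each of the 61 triangles would be one `Triangle` entry in camera-image coordinates.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// A 2D point in camera-image pixel coordinates.
struct Point {
    float x;
    float y;
};

// One triangle of the table layout. windowId (hypothetical) identifies
// the stained-glass window this triangle should trigger.
struct Triangle {
    Point a, b, c;
    int windowId;
};

// Returns the centroid of all "red enough" pixels in an interleaved RGB
// buffer, or std::nullopt if fewer than minPixels qualify. This is a
// simplified stand-in for real blob detection (e.g. OpenCV contours).
std::optional<Point> findRedPointer(const std::vector<std::uint8_t>& rgb,
                                    int width, int height,
                                    int minRed = 150, int maxOther = 90,
                                    std::size_t minPixels = 20) {
    assert(rgb.size() == static_cast<std::size_t>(width) * height * 3);
    double sumX = 0.0, sumY = 0.0;
    std::size_t count = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = (static_cast<std::size_t>(y) * width + x) * 3;
            const int r = rgb[i], g = rgb[i + 1], b = rgb[i + 2];
            // Hypothetical fixed thresholds: strong red, weak green and blue.
            if (r >= minRed && g <= maxOther && b <= maxOther) {
                sumX += x;
                sumY += y;
                ++count;
            }
        }
    }
    if (count < minPixels) return std::nullopt;
    return Point{static_cast<float>(sumX / count), static_cast<float>(sumY / count)};
}

// Sign of the cross product: tells which side of edge (p1, p2) point p is on.
static float edgeSign(Point p, Point p1, Point p2) {
    return (p.x - p2.x) * (p1.y - p2.y) - (p1.x - p2.x) * (p.y - p2.y);
}

// True if p lies inside triangle t or on its border (same-side test).
bool insideTriangle(Point p, const Triangle& t) {
    const float d1 = edgeSign(p, t.a, t.b);
    const float d2 = edgeSign(p, t.b, t.c);
    const float d3 = edgeSign(p, t.c, t.a);
    const bool hasNeg = d1 < 0 || d2 < 0 || d3 < 0;
    const bool hasPos = d1 > 0 || d2 > 0 || d3 > 0;
    return !(hasNeg && hasPos);
}

// Returns the windowId of the first triangle containing p, or -1 if none.
int hitTriangle(Point p, const std::vector<Triangle>& triangles) {
    for (const Triangle& t : triangles) {
        if (insideTriangle(p, t)) return t.windowId;
    }
    return -1;
}
```

Fixed RGB thresholds like these are just as sensitive to ambient light as the original setup; converting the frame to HSV first (for example with OpenCV's `cv::cvtColor`) is a common way to make the red test more robust. A change in the returned `windowId` from one frame to the next is where the display animation would be triggered.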

The installation is now stable, and a single application handles all of the processes.

During my last stay in Brittany I went to LiFE, a former German submarine base converted into a contemporary art space. The architecture offers interesting perspectives with repetitive patterns. The walls reflected in the water of the rooftop garden look like an infinite corridor to the sky.

I was lucky enough to spend some time in Jeppe Hein's installation “DISTANCE”. Like graphically and sculpturally composed roller coasters with a series of white balls circulating on them, the installation plays with the perception of space and time. The structure must have gone through a long process of trial and error: the physics of each part of the circuit lets the balls follow their path without any malfunction.

Bends, turns, curves, uphill and downhill slopes, loops. A ball going through obstacles, following its trajectory from start to end. Enjoy the walk.

Wave Field Synthesis & Spherical Harmonics

I went to the 2014 edition of the Manifeste festival at IRCAM and attended Fundamental Forces, an audiovisual projection by Robert Henke and Tarik Barri. The whole piece focuses on accelerating motion and attraction between visual and sound structures. Complex visual shapes and sonic structures emerge from repeated applications of simple mathematical operations, and are transformed and thrown around in real time to form an abstract world of floating objects in deep space. The visual component is based on Tarik Barri's ‘Versum’ computer animation engine, while the audio part is created by Robert Henke using real-time synthesis modules built in Max/MSP and embedded in a Max for Live environment.

Adapted for the venue, the premiere of Fundamental Forces benefited from Wave Field Synthesis (WFS) and Ambisonics, two techniques that reconstruct the physical properties of a sound field.

Based on the Huygens principle, WFS makes it possible to synthesize “sound holograms” by simulating the acoustic waves produced by virtual sound sources. A large number of regularly spaced loudspeakers are driven together, each with its own delay and gain, so that each one acts as a secondary source and their combined wavefronts reproduce the wavefront of the virtual source, preserving the fidelity of the spatial image.
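To make the delay-and-gain idea concrete, here is a simplified sketch for a virtual point source behind a straight loudspeaker array. It assumes a 2.5D point-source model in which each speaker's delay is its distance to the virtual source divided by the speed of sound, and its gain is proportional to cos φ / √r (φ being the angle between the source-to-speaker direction and the array normal). It leaves out the frequency-dependent pre-filter and the edge tapering of a complete WFS driving function, and the names `wfsPointSource` and `SpeakerDrive` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Driving parameters for one loudspeaker: delay in seconds, linear gain.
struct SpeakerDrive {
    double delaySec;
    double gain;
};

// Simplified 2.5D WFS delays and gains for a virtual point source at
// (srcX, srcY). Speakers sit on the x-axis at x = i * spacing, listeners
// are at y > 0, so the source must be behind the array (srcY < 0).
// Omits the sqrt(jk) pre-equalization filter and edge tapering.
std::vector<SpeakerDrive> wfsPointSource(double srcX, double srcY,
                                         int numSpeakers, double spacing,
                                         double speedOfSound = 343.0) {
    assert(numSpeakers > 0 && spacing > 0.0 && srcY < 0.0);
    std::vector<SpeakerDrive> drives;
    drives.reserve(numSpeakers);
    for (int i = 0; i < numSpeakers; ++i) {
        const double x0 = i * spacing;   // speaker position along the array
        const double dx = x0 - srcX;
        const double dy = 0.0 - srcY;    // positive, since srcY < 0
        const double r = std::sqrt(dx * dx + dy * dy);
        // Cosine of the angle between the source->speaker direction
        // and the array normal (0, 1).
        const double cosPhi = dy / r;
        // Farther speakers fire later and more quietly.
        drives.push_back({r / speedOfSound,
                          cosPhi > 0.0 ? cosPhi / std::sqrt(r) : 0.0});
    }
    return drives;
}
```

For a source 1 m behind the middle of a three-speaker array, the centre speaker gets the shortest delay and the largest gain, while the two outer speakers get equal, longer delays and smaller gains; that curvature of delays is what shapes the outgoing wavefront. In a real system each delay would be applied with a (fractional) delay line per output channel.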

Ambisonics is a method of recording and reproducing 3D sound that represents the spatial dependence of an acoustic field as a combination of basic spatial patterns. The sound is encoded as an ordered series of components called spherical harmonics, which are then decoded for the loudspeaker layout at hand.
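As a small illustration of the encoding step, the sketch below encodes a mono sample into first-order Ambisonics, i.e. four spherical-harmonic components. It assumes the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalization); the festival setup may well have used higher orders or a different convention, and `encodeFirstOrder` is a hypothetical name.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// First-order Ambisonics encoding of one mono sample (AmbiX convention:
// ACN order W, Y, Z, X; SN3D normalization). Azimuth is measured
// counter-clockwise from straight ahead, elevation upward from the
// horizontal plane, both in radians.
std::array<double, 4> encodeFirstOrder(double sample, double azimuth,
                                       double elevation) {
    assert(std::isfinite(azimuth) && std::isfinite(elevation));
    const double cosEl = std::cos(elevation);
    return {
        sample,                               // W: order 0, omnidirectional
        sample * std::sin(azimuth) * cosEl,   // Y: order 1, left-right
        sample * std::sin(elevation),         // Z: order 1, up-down
        sample * std::cos(azimuth) * cosEl    // X: order 1, front-back
    };
}
```

Decoding then multiplies these channels by a decoder matrix built for the actual loudspeaker positions. Higher orders add more spherical harmonics, (N + 1)² channels for order N, which sharpens the spatial resolution.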

I also got a glimpse of the research going on in the studios, from voice synthesis to the IRCAMAX audio plugins and gesture-sound interaction. I discussed the in-house work on web-based sound visualization and generation using the low-level Web Audio API; take a look at the open-source code available on the IRCAM GitHub repository.