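```cpp
// Sound trigger: play the bip, clear to white using soundColor as alpha,
// and, if auto-camera is on, move the camera to soundCamera.
if (switchSound) {
    bip1.play();
    ofBackground(255, 255, 255, soundColor);
    if (autoCam) {
        cam.setDistance(soundCamera);
    }
}
```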

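```cpp
if (randomModel == 0) {
    // Mode 0: only the bip pad is unpaused.
    bipPad.setPaused(false);
    noisePad.setPaused(true);
    breeze.setPaused(true);

    // Outer wireframe ring, spinning 25 degrees per second around Y.
    ofPushMatrix();
    ofRotate(ofGetElapsedTimef() * 25.0, 0, 1, 0);
    ringModelWireframe.setScale(10, 10, 10);
    ringModelWireframe.draw(OF_MESH_WIREFRAME);
    ofPopMatrix();

    // Nested rings. The rotations accumulate inside this push/pop pair:
    // the -25 deg/s rotation before ringModel2 cancels the +25 deg/s one
    // applied for ringModel1, so ringModel2 is drawn unrotated, and
    // ringModel4 gets both the X rotation and an extra Y rotation.
    ofPushMatrix();
    ofRotate(ofGetElapsedTimef() * 25.0, 1, 0, 0);
    ringModel1.setScale(2, 2, 2);
    ringModel1.draw(OF_MESH_WIREFRAME);
    ofRotate(ofGetElapsedTimef() * -25.0, 1, 0, 0);
    ringModel2.setScale(4, 4, 4);
    ringModel2.draw(OF_MESH_WIREFRAME);
    ofRotate(ofGetElapsedTimef() * 25.0, 1, 0, 0);
    ringModel3.setScale(6, 6, 6);
    ringModel3.draw(OF_MESH_WIREFRAME);
    ofRotate(ofGetElapsedTimef() * 25.0, 0, 1, 0);
    ringModel4.setScale(8, 8, 8);
    ringModel4.draw(OF_MESH_WIREFRAME);
    ofPopMatrix();

    // Central crystal scaled by soundScaleModel, drawn solid with a
    // wireframe overlay on top.
    crystalModel.setScale(soundScaleModel * 1.5, soundScaleModel * 1.5, soundScaleModel * 1.5);
    crystalModel.draw(OF_MESH_FILL);
    crystalModel.draw(OF_MESH_WIREFRAME);
```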

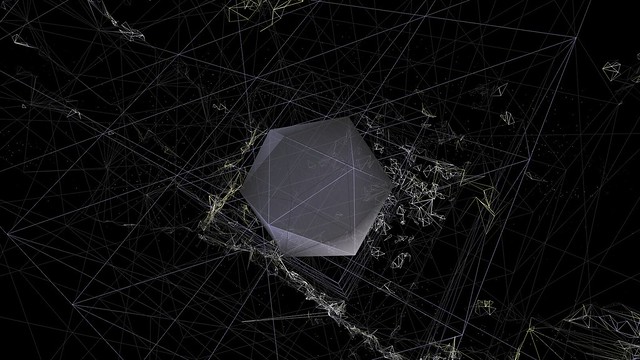

```cpp
} else if (randomModel == 1) {
    // Mode 1: only the noise pad is unpaused.
    bipPad.setPaused(true);
    noisePad.setPaused(false);
    breeze.setPaused(true);

    // One cube model drawn at four scales. The rotations accumulate, so
    // each larger copy adds a rotation (X, then Y, then Z, then the
    // (1, 0, 1) diagonal) on top of the previous ones.
    ofPushMatrix();
    ofRotate(ofGetElapsedTimef() * 25.0, 1, 0, 0);
    cubeModel.setScale(2, 2, 2);
    cubeModel.draw(OF_MESH_WIREFRAME);
    ofRotate(ofGetElapsedTimef() * 25.0, 0, 1, 0);
    cubeModel.setScale(4, 4, 4);
    cubeModel.draw(OF_MESH_WIREFRAME);
    ofRotate(ofGetElapsedTimef() * 25.0, 0, 0, 1);
    cubeModel.setScale(6, 6, 6);
    cubeModel.draw(OF_MESH_WIREFRAME);
    ofRotate(ofGetElapsedTimef() * 25.0, 1, 0, 1);
    cubeModel.setScale(8, 8, 8);
    cubeModel.draw(OF_MESH_WIREFRAME);
    ofPopMatrix();

    // Central icosahedron scaled by soundScaleModel, drawn solid with a
    // wireframe overlay on top.
    icosaedreModel.setScale(soundScaleModel * 1.5, soundScaleModel * 1.5, soundScaleModel * 1.5);
    icosaedreModel.draw(OF_MESH_FILL);
    icosaedreModel.draw(OF_MESH_WIREFRAME);
}
```